Research

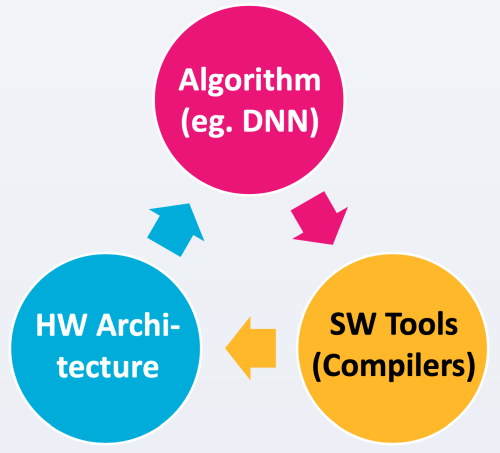

Research at ICCL: Algorithm/HW/SW Co-design for Intelligent SoC

1. Efficient Deep Learning Architectures

2. Hardware-Friendly Deep Neural Networks

3. Architectures and Design Tools for In-Memory Computing Accelerators

4. Architectures and Compilers for Reconfigurable Computing

5. Electronic Design Automation (EDA) and System-Level Design

Recent Projects

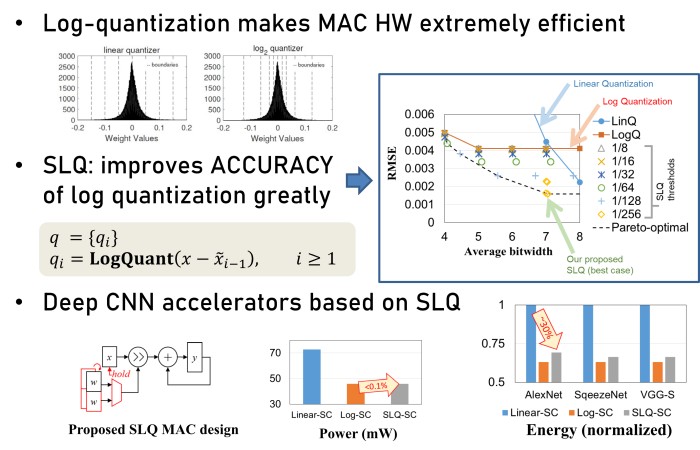

1. Hardware-Friendly Quantization for Efficient DNN Accelerators

Quarry: Quantization-based ADC Reduction for ReRAM-based Deep Neural Network Accelerators, Azat Azamat, Faaiz Asim and Jongeun Lee**, Proc. of International Conference on Computer-Aided Design (ICCAD), November, 2021.

Automated Log-Scale Quantization for Low-Cost Deep Neural Networks, Sangyun Oh, Hyeonuk Sim, Sugil Lee and Jongeun Lee**, Proc. of Conference on Computer Vision and Pattern Recognition (CVPR), June, 2021.

RRNet: Repetition-Reduction Network for Energy Efficient Depth Estimation, Sangyun Oh, Hye-Jin S. Kim, Jongeun Lee and Junmo Kim, IEEE Access, 8, pp. 106097-106108, IEEE, June, 2020.

Successive Log Quantization for Cost-Efficient Neural Networks Using Stochastic Computing, Sugil Lee, Hyeonuk Sim, Jooyeon Choi and Jongeun Lee**, Proc. of the 56th Annual ACM/IEEE Design Automation Conference (DAC), pp. 7:1-7:6, June, 2019.

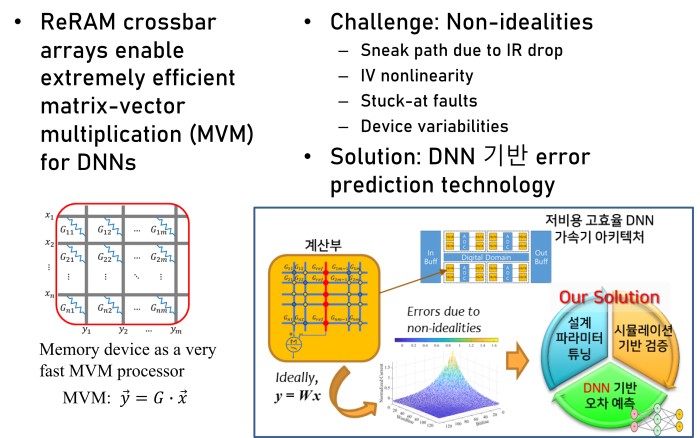

2. In-Memory Computing DNN Hardware Using Emerging Memory

Fast and Low-Cost Mitigation of ReRAM Variability for Deep Learning Applications, Sugil Lee, Mohammed Fouda, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of International Conference on Computer Design (ICCD), October, 2021.

Quarry: Quantization-based ADC Reduction for ReRAM-based Deep Neural Network Accelerators, Azat Azamat, Faaiz Asim and Jongeun Lee**, Proc. of International Conference on Computer-Aided Design (ICCAD), November, 2021.

Cost- and Dataset-free Stuck-at Fault Mitigation for ReRAM-based Deep Learning Accelerators, Giju Jung, Mohammed Fouda, Sugil Lee, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of Design, Automation and Test in Europe (DATE), pp. 1733-1738, February, 2021.

IR-QNN Framework: An IR Drop-Aware Offline Training of Quantized Crossbar Arrays, Mohammed E. Fouda, Sugil Lee, Jongeun Lee, Gun Hwan Kim, Fadi Kurdahi and Ahmed Eltawil, IEEE Access, 8, pp. 228392-228408, IEEE, December, 2020.

Architecture-Accuracy Co-optimization of ReRAM-based Low-cost Neural Network Processor, Segi Lee, Sugil Lee, Jongeun Lee**, Jong-Moon Choi, Do-Wan Kwon, Seung-Kwang Hong and Kee-Won Kwon, Proc. of the 30th ACM Great Lakes Symposium on VLSI (GLSVLSI), pp. 427-432, September, 2020.

Learning to Predict IR Drop with Effective Training for ReRAM-based Neural Network Hardware, Sugil Lee, Mohammed Fouda, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of the 57th Annual ACM/IEEE Design Automation Conference (DAC), pp. 1-6, July, 2020.

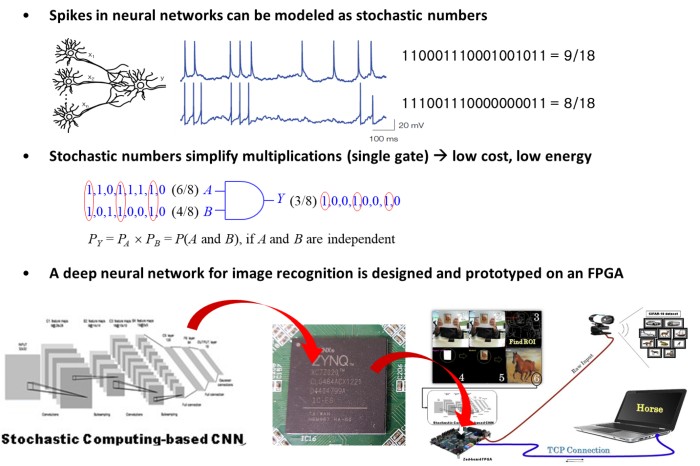

3. Deep Neural Networks Based on Stochastic Computing

Bitstream-based Neural Network for Scalable, Efficient and Accurate Deep Learning Hardware, Hyeonuk Sim and Jongeun Lee**, Frontiers in Neuroscience, 14, p. 1198, Frontiers, December, 2020.

Cost-effective Stochastic MAC Circuits for Deep Neural Networks, Hyeonuk Sim and Jongeun Lee**, Neural Networks, 117, pp. 152-162, Elsevier, September, 2019.

Successive Log Quantization for Cost-Efficient Neural Networks Using Stochastic Computing, Sugil Lee, Hyeonuk Sim, Jooyeon Choi and Jongeun Lee**, Proc. of the 56th Annual ACM/IEEE Design Automation Conference (DAC), pp. 7:1-7:6, June, 2019.

Log-Quantized Stochastic Computing for Memory and Computation Efficient DNNs, Hyeonuk Sim and Jongeun Lee**, Proc. of the 24th Asia and South Pacific Design Automation Conference (ASP-DAC), pp. 280-285, January, 2019.

An Efficient and Accurate Stochastic Number Generator Using Even-distribution Coding, Aidyn Zhakatayev, Kyounghoon Kim, Jongeun Lee** and Kiyoung Choi, IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD), 37(12), pp. 3056-3066, December, 2018.

DPS: Dynamic Precision Scaling for Stochastic Computing-Based Deep Neural Networks, Hyeonuk Sim, Saken Kenzhegulov and Jongeun Lee**, Proc. of the 55th Annual ACM/IEEE Design Automation Conference (DAC), pp. 13:1-13:6, June, 2018.

Sign-Magnitude SC: Getting 10X Accuracy for Free in Stochastic Computing for Deep Neural Networks, Aidyn Zhakatayev, Sugil Lee, Hyeonuk Sim and Jongeun Lee**, Proc. of the 55th Annual ACM/IEEE Design Automation Conference (DAC), pp. 158:1-158:6, June, 2018.

FPGA Implementation of Convolutional Neural Network Based on Stochastic Computing, Daewoo Kim, Mansureh S. Moghaddam, Hossein Moradian, Hyeonuk Sim, Jongeun Lee** and Kiyoung Choi, Proc. of IEEE International Conference on Field-Programmable Technology (FPT), pp. 287-290, December, 2017.

Accurate and Efficient Stochastic Computing Hardware for Convolutional Neural Networks, Joonsang Yu, Kyounghoon Kim, Jongeun Lee* and Kiyoung Choi, Proc. of IEEE International Conference on Computer Design (ICCD), pp. 105-112, November, 2017.

A New Stochastic Computing Multiplier with Application to Deep Convolutional Neural Networks, Hyeonuk Sim and Jongeun Lee**, Proc. of the 54th Annual ACM/IEEE Design Automation Conference (DAC), pp. 29:1-29:6, June, 2017.

Scalable Stochastic-Computing Accelerator for Convolutional Neural Networks, Hyeonuk Sim, Dong Nguyen, Jongeun Lee** and Kiyoung Choi, Proc. of the 22nd Asia and South Pacific Design Automation Conference (ASP-DAC), pp. 696-701, January, 2017.

See Research Archive for more.

Also check out the front page, which features news from our lab.