In a computer system that employs on-chip accelerators, such as streaming co-processors or reconfigurable hardware co-processors, it is vital to minimize communication overhead; otherwise it can cancel out any performance gain from the accelerator.

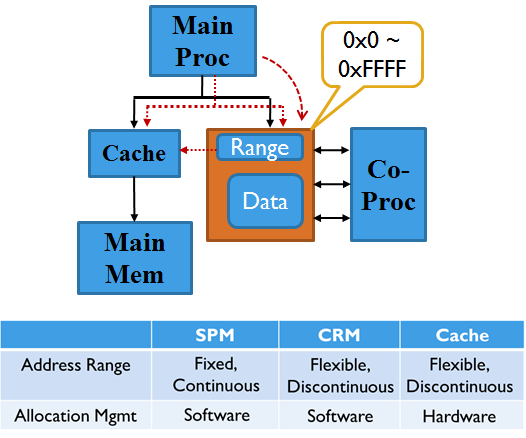

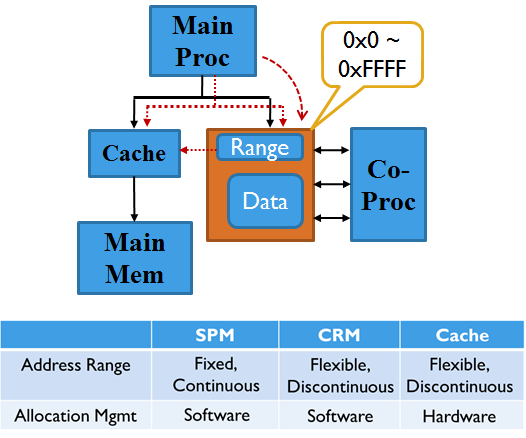

Our first-cut approach to this problem, tailored to the reconfigurable hardware co-processors that we have been working with, is an on-chip shared memory that is a little more intelligent than a plain scratch-pad memory but less so than a cache. We call it Configurable Range Memory (CRM). Here is an article introducing its basic ideas.

Application-specific hardware and reconfigurable processors can dramatically speed up compute-intensive kernels of applications, offloading the main processor. To minimize the communication overhead in such a coprocessor approach, the two processors can share an on-chip memory, which each processor may treat as a scratchpad memory. However, this setup poses a significant challenge to the main processor, which now must manage data on the scratchpad explicitly, often resulting in superfluous data copies. This paper presents an enhancement to the scratchpad, called Configurable Range Memory (CRM), that can reduce the need for explicit management and thus reduce data copying and promote data reuse on the shared memory. Our experimental results using benchmarks from DSP and multimedia applications demonstrate that our CRM architecture can significantly reduce the communication overhead compared to the architecture without shared memory, while not requiring explicit data management.
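To make the idea a bit more concrete, here is a toy model in Python. The abstract does not spell out the mechanism, so this sketch assumes, from the name alone, that software configures address ranges and that any access falling inside a configured range is served by the shared on-chip memory without an explicit copy. The class `RangeMemory` and its methods are hypothetical names for illustration, not the CRM design from the article.

```python
from dataclasses import dataclass, field

@dataclass
class RangeMemory:
    """Toy model (assumption): addresses inside a configured range live on-chip."""
    capacity: int                                  # on-chip words available
    ranges: list = field(default_factory=list)     # configured (base, size) pairs
    on_chip: dict = field(default_factory=dict)    # shared on-chip storage
    main: dict = field(default_factory=dict)       # off-chip main memory

    def configure(self, base, size):
        # Refuse a range that would exceed the on-chip capacity.
        used = sum(s for _, s in self.ranges)
        if used + size > self.capacity:
            raise ValueError("range does not fit in on-chip memory")
        self.ranges.append((base, size))

    def _in_range(self, addr):
        return any(b <= addr < b + s for b, s in self.ranges)

    def write(self, addr, value):
        # The program uses ordinary addresses; the range check picks the storage.
        (self.on_chip if self._in_range(addr) else self.main)[addr] = value

    def read(self, addr):
        store = self.on_chip if self._in_range(addr) else self.main
        return store.get(addr, 0)

crm = RangeMemory(capacity=16)
crm.configure(0x1000, 8)      # hypothetical buffer shared with the co-processor
crm.write(0x1000, 7)          # main processor produces data in place
crm.write(0x2000, 9)          # outside any range: goes to main memory
print(crm.read(0x1000))       # co-processor reads the same address, no copy
```

The point of the sketch is only that neither side issues an explicit copy: both processors use the same addresses, and the range configuration decides where the data lives.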

Read the full text via this.

Device miniaturization is causing significant problems in semiconductor reliability. One particularly nasty problem is the transient fault, called transient rather than permanent because such faults occur only temporarily. You may hit such an error once and not see it again when you repeat the same operation, so it is not reproducible. This poses a serious challenge to testing, and mitigating the effects of transient errors at runtime is equally challenging. The questions traditionally asked are (i) how to detect such errors and (ii) how to correct the computation once they are detected.

A very different approach to the same problem is to try to reduce the rate of such errors, say to 1/100 of the original rate, because if the errors happen very rarely they may not be a problem. This can be done as easily as by recompiling the program. Sounds intriguing? For more detail, please check this out: “A compiler optimization to reduce soft errors in register files,” ACM SIGPLAN Notices, Vol. 44, No. 7, pp. 41-49, by Jongeun Lee and Aviral Shrivastava, 2009.

The register file (RF) is extremely vulnerable to soft errors, and traditional redundancy-based schemes to protect the RF are prohibitive, not only because the RF is often in the timing-critical path of the processor, but also because it is one of the hottest blocks on the chip, so adding any extra circuitry to it is undesirable. Pure software approaches would be ideal in this case, but previous approaches based on program duplication have very significant runtime overheads, and others based on instruction scheduling are only moderately effective due to their local scope. We show that the problem of protecting registers inherently requires inter-procedural analysis, and that intra-procedural optimizations are ineffective. This paper presents a pure compiler approach, based on inter-procedural code analysis, to reduce the vulnerability of registers by temporarily writing live variables to protected memory. We formulate the problem as an integer linear programming problem and also present a very efficient heuristic algorithm. Our experiments demonstrate that our proposed technique can reduce the vulnerability of the RF by 33~37% on average and up to 66%, with a small 2% increase in runtime. In addition, our overhead reduction optimizations can effectively reduce the code size overhead, by more than 40% on average, to a mere 5~6%, as compared to highly optimized binaries.
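A rough way to see why writing a live value to protected memory helps is sketched below. It assumes that a register counts as vulnerable from its last write or read until its next read, a common definition in this line of work that the abstract itself does not state. The trace format and the function `register_vulnerability` are made up for illustration and are not the paper's analysis.

```python
def register_vulnerability(trace):
    """Sum the cycles during which a register holds a value that will still be read.

    `trace` is a list of (cycle, op, reg) tuples with op "w" (write) or "r" (read),
    sorted by cycle. The interval ending in a write is not counted, since a
    corrupted value that gets overwritten does no harm.
    """
    last_access = {}
    total = 0
    for cycle, op, reg in trace:
        if op == "r" and reg in last_access:
            total += cycle - last_access[reg]
        last_access[reg] = cycle
    return total

# r1 is written, stays live across a long callee, and is read afterwards.
baseline = [(0, "w", "r1"), (100, "r", "r1")]

# Same program, but the compiler stores r1 to protected memory before the
# call (a read of r1) and reloads it just before the use (a write of r1).
protected = [(0, "w", "r1"), (1, "r", "r1"), (99, "w", "r1"), (100, "r", "r1")]

print(register_vulnerability(baseline))   # 100
print(register_vulnerability(protected))  # 2
```

The two extra instructions are the runtime and code-size cost; the callee in the middle is why the decision needs an inter-procedural view.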

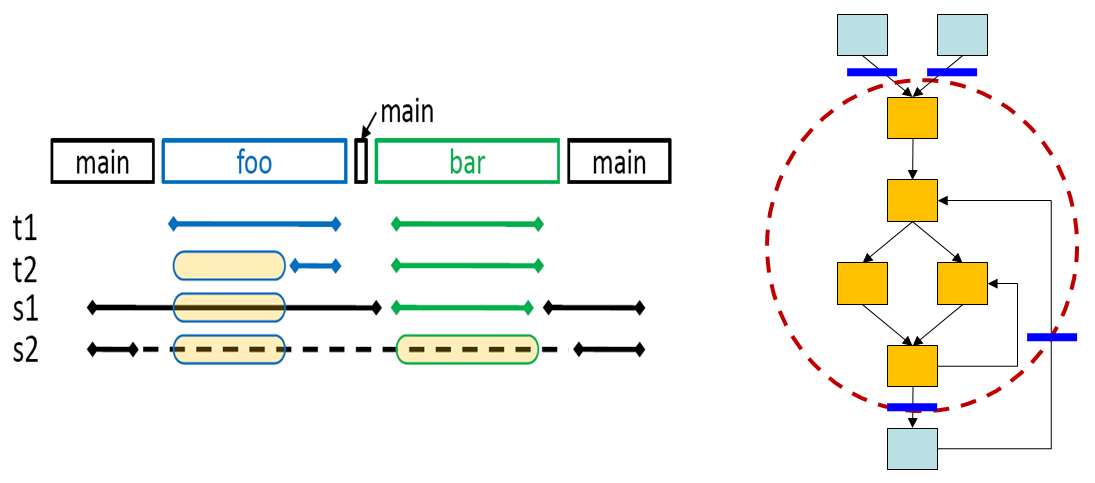

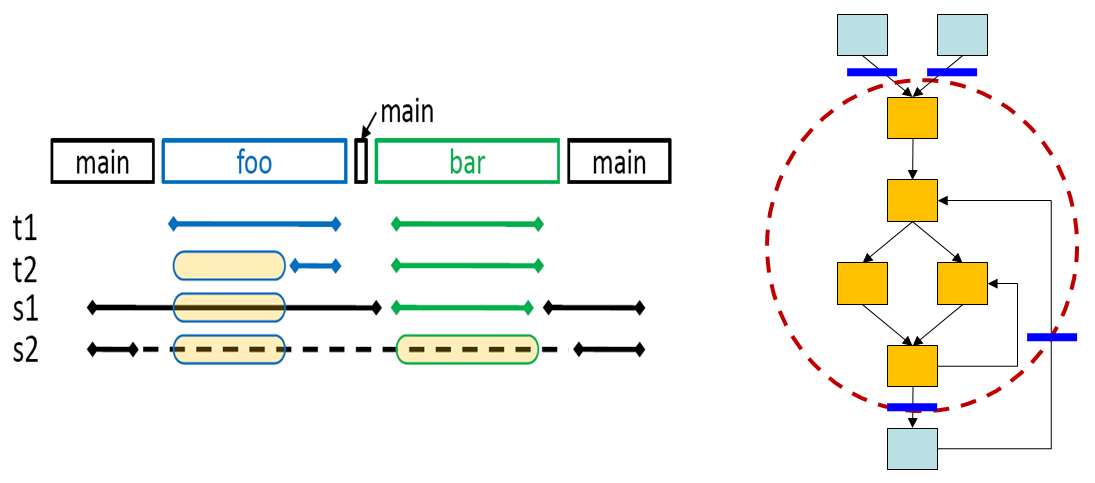

Dynamic vs. static view of a program. A transient error is best defined and understood in the dynamic view (left) of the program, but compilers can only see the static view (right); hence the challenge of this approach.

We presented a first approach to optimizing software for the memory architecture of the target reconfigurable computing system at HiPEAC 2010, held in Pisa, Italy. The motivation of this work is that in multimedia applications the performance bottleneck is often in data transfer, not in computation per se. While we previously tried only to maximize the computation rate, it may be better to sacrifice a little computation rate to increase the data transfer rate when there is a trade-off between the two, as in the case of CGRA mapping. We targeted our compiler at an ADRES-like architecture with a slightly simplified local memory subsystem: double-buffered and multi-banked, with the banks of the local memory mapped one-to-one to the load-store units of the reconfigurable architecture.
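The trade-off can be illustrated with a small back-of-the-envelope model. It assumes that double buffering lets the transfer of the next tile overlap with computation on the current one, so each steady-state step costs whichever of the two is slower. The function name and all cycle counts below are hypothetical, chosen only to show the effect; they are not measurements from the paper.

```python
def double_buffered_runtime(n_tiles, compute, transfer):
    """Cycles to process n_tiles when transfer and compute overlap (assumed model).

    The first tile must be loaded before any computation starts, the last
    computation has no transfer to overlap with, and every step in between
    is bound by the slower of the two.
    """
    if n_tiles < 1:
        raise ValueError("need at least one tile")
    return transfer + (n_tiles - 1) * max(compute, transfer) + compute

# Mapping A: fastest computation, but its access pattern makes transfers slow.
a = double_buffered_runtime(100, compute=10, transfer=16)

# Mapping B: slightly slower computation, friendlier to the memory banks.
b = double_buffered_runtime(100, compute=12, transfer=11)

print(a, b)  # 1610 1211
```

In this made-up case mapping B computes 20% slower per tile yet finishes sooner, because mapping A spends most of its time waiting for data.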

Coarse-Grained Reconfigurable Arrays (CGRAs) are a very promising platform, providing both high power efficiency, up to 10-100 MOps/mW, and software programmability. However, this cardinal promise of CGRAs critically hinges on the effectiveness of application mapping onto CGRA platforms. While previous solutions have greatly improved the computation speed, they have largely ignored the impact of the local memory architecture on the achievable power and performance. This paper motivates the need for memory-aware application mapping for CGRAs, and proposes an effective solution for application mapping that considers the effects of various memory architecture parameters, including the number of banks, local memory size, and the communication bandwidth between the local memory and the external main memory. Our proposed solution achieves a 62% reduction in the energy-delay product, which factors into about 47% and 28% reductions in the energy consumption and runtime, respectively, as compared to memory-unaware mapping for realistic local memory architectures. We also show that our scheme scales across a range of applications and memory parameters.
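As a quick sanity check on how the three percentages relate: the energy-delay product is energy multiplied by runtime, so the remaining fractions multiply. The helper below is just that arithmetic; the function name is ours.

```python
def edp_reduction(energy_reduction, runtime_reduction):
    """Reduction in energy-delay product given fractional reductions in each factor."""
    remaining = (1.0 - energy_reduction) * (1.0 - runtime_reduction)
    return 1.0 - remaining

# 0.53 * 0.72 = 0.3816 of the original EDP remains, i.e. about a 62% reduction.
print(round(edp_reduction(0.47, 0.28), 4))  # 0.6184
```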

Read the full paper: “Memory-Aware Application Mapping on Coarse-Grained Reconfigurable Arrays,” Lecture Notes in Computer Science (HiPEAC ’10), Vol. 5952, pp. 171-185, by Yongjoo Kim, Jongeun Lee, Aviral Shrivastava, Jonghee W. Yoon and Yunheung Paek, 2010.

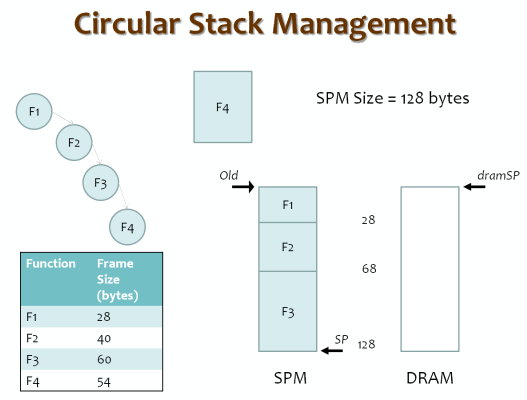

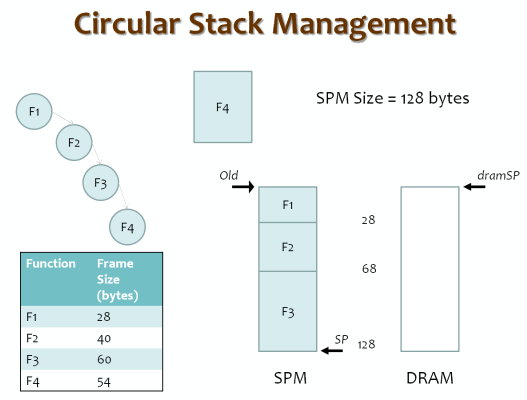

A nice stack management technique for limited local memory architectures such as the IBM Cell was presented at ASP-DAC and in IEEE Trans. CAD in 2009. The pointer problem was extremely difficult to handle correctly, but we managed to do it, at least from the correctness point of view.

A dynamic scratch pad memory (SPM) management scheme for program stack data with the objective of processor power reduction is presented. The basic technique does not need the SPM size at compile time, does not mandate any hardware changes, does not need profile information, and seamlessly integrates support for recursive functions. Stack frames are managed using a software SPM manager integrated into the application binary; the scheme shows average energy savings of 32% along with a performance improvement of 13% on benchmarks from MiBench. SPM management can be further optimized and made pointer-safe when the SPM size is known.
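The flavor of a software stack manager can be conveyed with a small simulation. This is a sketch under our own assumptions, not the manager from the paper: it evicts the oldest resident frames to main memory when a new frame does not fit, and reloads the caller's frame on return if it was evicted. The class `StackManager` and its fields are hypothetical, and pointers into evicted frames are not modeled at all.

```python
class StackManager:
    """Toy software-managed stack in a scratch pad of `spm_size` bytes."""

    def __init__(self, spm_size):
        self.spm_size = spm_size   # known only at runtime in this model
        self.in_spm = []           # sizes of resident frames, oldest first
        self.evicted = []          # sizes of frames spilled to main memory
        self.bytes_moved = 0       # traffic between SPM and main memory

    def call(self, frame_size):
        # Invoked before a function call: make room, then allocate the frame.
        if frame_size > self.spm_size:
            raise ValueError("frame larger than the SPM")
        while sum(self.in_spm) + frame_size > self.spm_size:
            victim = self.in_spm.pop(0)          # evict the oldest frame first
            self.evicted.append(victim)
            self.bytes_moved += victim
        self.in_spm.append(frame_size)

    def ret(self):
        # Invoked after a function returns: free its frame and make sure
        # the caller's frame is resident again.
        self.in_spm.pop()
        if not self.in_spm and self.evicted:
            frame = self.evicted.pop()           # most recently evicted = caller
            self.in_spm.append(frame)
            self.bytes_moved += frame

mgr = StackManager(spm_size=100)
mgr.call(60)   # main
mgr.call(50)   # does not fit next to main's frame, so main is evicted
mgr.call(30)   # fits alongside the 50-byte frame
mgr.ret()
mgr.ret()      # main's frame is brought back
print(mgr.in_spm, mgr.bytes_moved)  # [60] 120
```

Because the manager only reacts to calls and returns as they happen, recursion needs no special handling in this model: each recursive call is just another `call` with the same frame size.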

Read the full paper: “A Software-Only Solution to Use Scratch Pads for Stack Data,” by Aviral Shrivastava, Arun Kannan, and Jongeun Lee*, published in IEEE Transactions on CAD, vol. 28, no. 11, pp. 1719-1727, November 2009.