Hardware-friendly quantization for efficient DNN accelerators

Quarry: Quantization-based ADC Reduction for ReRAM-based Deep Neural Network Accelerators, Azat Azamat, Faaiz Asim and Jongeun Lee**, Proc. of International Conference on Computer-Aided Design (ICCAD), November, 2021.

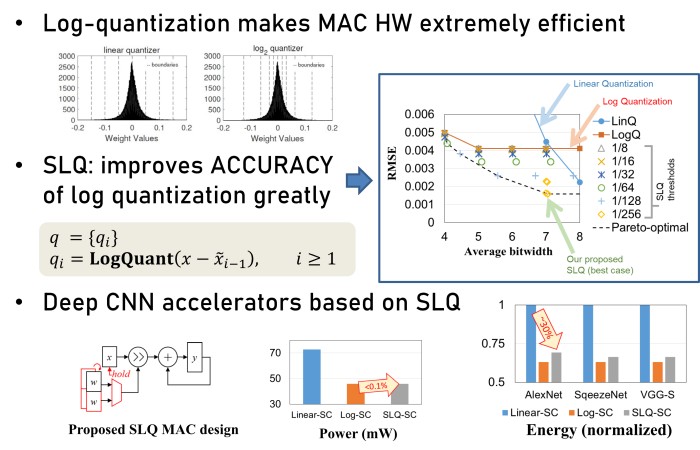

Automated Log-Scale Quantization for Low-Cost Deep Neural Networks, Sangyun Oh, Hyeonuk Sim, Sugil Lee and Jongeun Lee**, Proc. of Conference on Computer Vision and Pattern Recognition (CVPR), June, 2021.

RRNet: Repetition-Reduction Network for Energy Efficient Depth Estimation, Sangyun Oh, Hye-Jin S. Kim, Jongeun Lee and Junmo Kim, IEEE Access, vol. 8, pp. 106097-106108, IEEE, June, 2020.

Successive Log Quantization for Cost-Efficient Neural Networks Using Stochastic Computing, Sugil Lee, Hyeonuk Sim, Jooyeon Choi and Jongeun Lee**, Proc. of the 56th Annual ACM/IEEE Design Automation Conference (DAC), pp. 7:1-7:6, June, 2019.