Welcome party

Welcome, new members of CSE! We’ll have a welcome party today for people in the CSE labs. Both newcomers and existing members are welcome.

Parallel processing, or multi-core compilation, is more of a challenge than a blessing, since it means the era of the free ride is over. When hardware performance kept doubling every 18 months, the same software could run twice as fast as before simply by moving to new hardware. That is no longer true unless the software can somehow exploit the increased parallelism of the new machine.

There are many challenges, including how to compile applications and, in particular, how to map code and data onto heterogeneous as well as homogeneous processor cores and manage them efficiently. This management must cover not only performance optimization but also thermal management, energy and power optimization (such as Dynamic Voltage and Frequency Scaling), and reliability (such as soft error resilience). These multi-dimensional, multi-objective problems require innovative ideas and approaches at the architecture, compiler, computer-aided design, operating system, and algorithm levels.

In the ICCL Lab, we are particularly interested in data management problems for distributed memory architectures such as the Cell processor architecture, where simple scratchpad memories are used instead of power-hungry caches to save power.

Figure 1: Sony/Toshiba/IBM Cell.

Processor Idle Cycle Aggregation (PICA) is a promising approach to low-power processor execution, in which small memory stalls are aggregated into one large stall and the processor is switched to low-power mode for its duration. We extend the previously proposed approach in two dimensions. (i) We develop static analysis for the PICA technique and present optimum parameters for five common types of loops based on steady-state analysis. (ii) We show that software-only control cannot guarantee correctness in a varying runtime environment and can potentially cause deadlocks. We enhance the robustness of PICA with a minimal hardware extension, ensuring correct execution for any loops and parameters, which greatly facilitates exploration-based parameter optimization. The combined use of our static analysis and exploration-based fine-tuning makes the PICA technique applicable to any memory-bound loop, with reduced energy consumption. We validate our analytical models against simulation-based optimization and show, through experiments on embedded application benchmarks, that our technique can be applied to a wide range of loops with an average energy reduction of 20% compared to execution without PICA.
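To see why aggregation matters, here is a toy sketch in Python. It is not the model from the paper. It assumes a simplified rule in which a stall is useful only when it is longer than the mode-switch overhead, and the function name, the stall lengths, and the overhead value are all hypothetical.

```python
def low_power_cycles(stall_lengths, switch_overhead):
    """Cycles spent in low-power mode under a simplified (assumed) rule:
    the processor sleeps only in stalls longer than the cost of switching
    into and out of low-power mode, and pays that cost once per stall."""
    return sum(s - switch_overhead for s in stall_lengths if s > switch_overhead)


# Hypothetical numbers: twenty 30-cycle memory stalls, 100-cycle switch overhead.
small_stalls = [30] * 20
switch_overhead = 100

# Without aggregation, no single stall is long enough to pay for a mode switch.
separate = low_power_cycles(small_stalls, switch_overhead)

# With aggregation, the same 600 idle cycles form one long stall.
aggregated = low_power_cycles([sum(small_stalls)], switch_overhead)

print(separate, aggregated)  # 0 500
```

In this toy setting the total idle time is identical in both cases. Only the aggregated stall is long enough to make the switch to low-power mode worthwhile.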

Two U-WURF students visited the HPC lab for one month. Mr. Ahn explored the ASIP (Application-Specific Instruction-set Processor) design approach to accelerate software-based solutions. Mr. Lee demonstrated the power of GPUs for general-purpose computing (so-called GPGPU) with two applications, i.e., matrix multiplication and a genetic algorithm. Congratulations on successfully completing the U-WURF program. Some details of their research can be found in the HPC wiki.

Chang Won Lee participated in U-WURF 2010. His wiki page.

Byung Jun Ahn participated in U-WURF 2010. His wiki page.

Introduction to GPU Super-computing Programming.

This 3+ hour seminar will cover the following topics:

1. What is GPU Programming

2. Scalar Processor vs. Vector Processor

3. Clustering/Clustered Computer programming

4. Vector Programming

5. CUDA

6. OpenCL

When & where: Fri 1/14, 2 ~ 6 pm. @ E205

Device miniaturization is causing significant problems in semiconductor reliability. One particularly nasty problem is the so-called transient fault: transient, as opposed to permanent, because these faults or errors happen only temporarily. You may experience such an error once and never see it again when you repeat the same operation, so it is not reproducible. This poses a very serious challenge to “testing”, and it is equally challenging to mitigate the effects of such transient errors at runtime. The questions traditionally asked are (i) how to detect such errors and (ii) how to correct the computation once they are detected.

A very different approach to the same problem is to try to reduce the rate of such errors, say to 1/100 of the original rate, because if errors happen very rarely they may not be a problem. This can be done as easily as by recompiling the program. Sounds intriguing? For more detail, please check this out: “A compiler optimization to reduce soft errors in register files,” ACM SIGPLAN Notices, Vol. 44, No. 7, pp. 41-49, by Jongeun Lee and Aviral Shrivastava, 2009.

The register file (RF) is extremely vulnerable to soft errors, and traditional redundancy-based schemes to protect the RF are prohibitive, not only because the RF is often in the timing-critical path of the processor but also because it is one of the hottest blocks on the chip, so adding any extra circuitry to it is undesirable. Pure software approaches would be ideal in this case, but previous approaches based on program duplication have very significant runtime overheads, and others based on instruction scheduling are only moderately effective because of their local scope. We show that the problem of protecting registers inherently requires inter-procedural analysis, and that intra-procedural optimizations are ineffective. This paper presents a pure compiler approach, based on inter-procedural code analysis, to reduce the vulnerability of registers by temporarily writing live variables to protected memory. We formulate the problem as an integer linear programming problem and also present a very efficient heuristic algorithm. Our experiments demonstrate that our proposed technique can reduce the vulnerability of the RF by 33~37% on average and up to 66%, with a small 2% increase in runtime. In addition, our overhead reduction optimizations can effectively reduce the code size overhead, by more than 40% on average, to a mere 5~6%, as compared to highly optimized binaries.

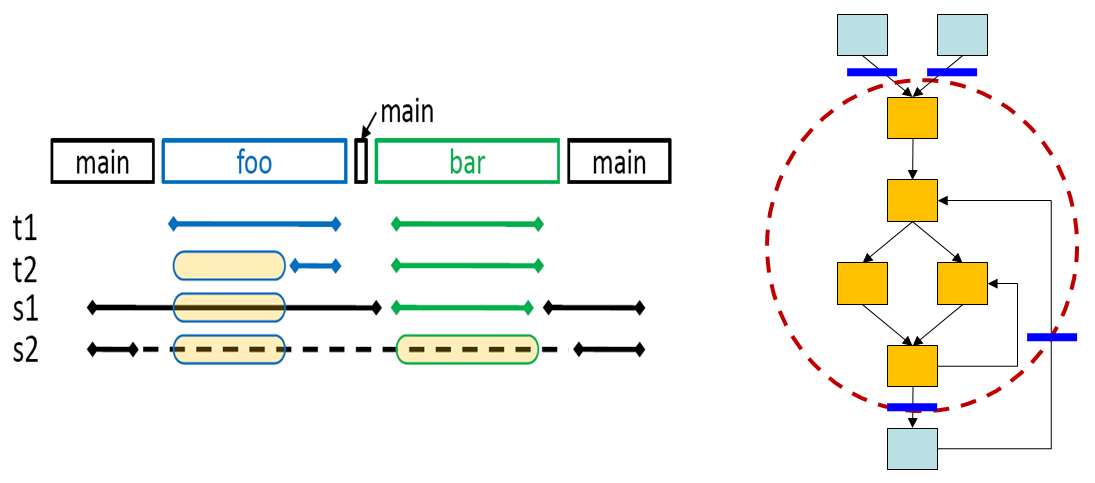

Dynamic vs. static view of a program. Transient errors are best defined and understood in the dynamic view (left) of the program, but compilers can see only the static view (right); hence the challenge of this approach.
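As a rough illustration of the dynamic view, the Python sketch below counts how long a register holds a value that will still be read. It is not the paper's analysis. It assumes a simplified definition in which a value is vulnerable from the cycle it is written until its last read before the next write, and the trace format, the function name, and the cycle numbers are all hypothetical.

```python
from collections import defaultdict


def vulnerable_cycles(trace):
    """Sum, per register, the cycles between each write and the last read
    of that value before the register is overwritten (assumed definition).

    trace: list of (cycle, register, op) tuples sorted by cycle,
           where op is 'W' (write) or 'R' (read).
    """
    last_write, last_read = {}, {}
    total = defaultdict(int)
    for cycle, reg, op in trace:
        if op == 'W':
            # Close the interval of the value being overwritten, if it was read.
            if reg in last_write and reg in last_read:
                total[reg] += last_read[reg] - last_write[reg]
            last_write[reg] = cycle
            last_read.pop(reg, None)
        elif op == 'R' and reg in last_write:
            last_read[reg] = cycle
    # Close intervals still open at the end of the trace.
    for reg, written in last_write.items():
        if reg in last_read:
            total[reg] += last_read[reg] - written
    return dict(total)


# Value written at cycle 0, read at cycles 10 and 100, overwritten at 120.
baseline = vulnerable_cycles(
    [(0, 'r1', 'W'), (10, 'r1', 'R'), (100, 'r1', 'R'), (120, 'r1', 'W')])

# Same program, but the value is stored to protected memory at cycle 11
# (a read of r1) and reloaded at cycle 95 (a write to r1).
with_spill = vulnerable_cycles(
    [(0, 'r1', 'W'), (10, 'r1', 'R'), (11, 'r1', 'R'),
     (95, 'r1', 'W'), (100, 'r1', 'R'), (120, 'r1', 'W')])

print(baseline, with_spill)  # {'r1': 100} {'r1': 16}
```

Parking the value in protected memory during the long gap between its two uses shortens the time it sits exposed in the register. That is the intuition behind temporarily writing live variables to protected memory.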

The best method, which is also recommended by Michael Shell, is to use this:

\enlargethispage{-X.Yin}

somewhere at the top of the first column of the last page. The last page gets effectively shortened by the “X.Yin” amount.

HPC and the Excluded Middle

By Daniel Reed October 24, 2010

I have repeatedly been told by both business leaders and academic researchers that they want “turnkey” HPC solutions that have the simplicity of desktop tools but the power of massively parallel computing. Such desktop tools would allow non-experts to create complex models quickly and easily, evaluate those models in parallel, and correlate the results with experimental and observational data. Unlike ultra-high-performance computing, this is about maximizing human productivity rather than obtaining the largest fraction of possible HPC platform performance. Most often, users will trade hardware performance for simplicity and convenience. This is an opportunity and a challenge, an opportunity to create domain-specific tools with high expressivity and a challenge to translate the output of those tools into efficient, parallel computations.

via HPC and the Excluded Middle | blog@CACM | Communications of the ACM.