In-Memory Computing DNN Hardware Using Emerging Memory

Fast and Low-Cost Mitigation of ReRAM Variability for Deep Learning Applications, Sugil Lee, Mohammed Fouda, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of International Conference on Computer Design (ICCD), October, 2021.

Quarry: Quantization-based ADC Reduction for ReRAM-based Deep Neural Network Accelerators, Azat Azamat, Faaiz Asim and Jongeun Lee**, Proc. of International Conference on Computer-Aided Design (ICCAD), November, 2021.

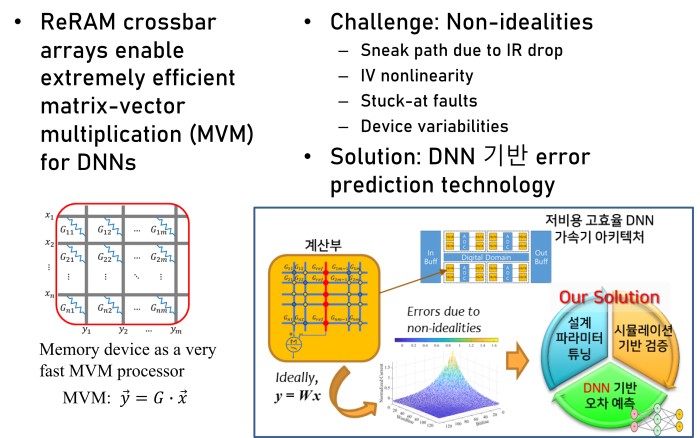

Cost- and Dataset-free Stuck-at Fault Mitigation for ReRAM-based Deep Learning Accelerators, Giju Jung, Mohammed Fouda, Sugil Lee, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of Design, Automation and Test in Europe (DATE), pp. 1733-1738, February, 2021.

IR-QNN Framework: An IR Drop-Aware Offline Training of Quantized Crossbar Arrays, Mohammed E. Fouda, Sugil Lee, Jongeun Lee, Gun Hwan Kim, Fadi Kurdahi and Ahmed Eltawil, IEEE Access, vol. 8, pp. 228392-228408, IEEE, December, 2020.

Architecture-Accuracy Co-optimization of ReRAM-based Low-cost Neural Network Processor, Segi Lee, Sugil Lee, Jongeun Lee**, Jong-Moon Choi, Do-Wan Kwon, Seung-Kwang Hong and Kee-Won Kwon, Proc. of the 30th ACM Great Lakes Symposium on VLSI (GLSVLSI), pp. 427-432, September, 2020.

Learning to Predict IR Drop with Effective Training for ReRAM-based Neural Network Hardware, Sugil Lee, Mohammed Fouda, Jongeun Lee**, Ahmed Eltawil and Fadi Kurdahi, Proc. of the 57th Annual ACM/IEEE Design Automation Conference (DAC), pp. 1-6, July, 2020.