PCNN (Pulse-Coded Neural Networks): Network models used to evaluate biologically inspired image processing. They are usually simulated on general-purpose computers, e.g. PCs or workstations.

SNNs (Spiking Neural Networks): A class of neural network models that, in addition to neuronal and synaptic state, incorporate the concept of time into their operating model.

SEE (Spiking Neural Network Emulation Engine): A field-programmable gate array (FPGA) based emulation engine for spiking neurons and adaptive synaptic weights. It tackles the memory bottleneck by providing a distributed memory architecture and high bandwidth to the weight memory.

PCNN – Simulated on PCs and workstations -> time-consuming

– Bottleneck: sequential access to the weight memory

FPGA SEE – Distributed memory

– High-bandwidth access to the weight memory

– Separate computation of neuron states and network topology
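Why the sequential weight memory is a bottleneck, and why distributing it helps, can be illustrated with a deliberately simplified cycle-count model. The sketch assumes, hypothetically, that each memory bank returns one weight per clock cycle and that the weights needed in a time step are spread evenly over the banks; `read_cycles` is an illustrative name, not part of SEE.

```python
def read_cycles(num_weights: int, num_banks: int) -> int:
    """Clock cycles needed to fetch `num_weights` synaptic weights when they are
    spread evenly over `num_banks` independent memories, each assumed to return
    one weight per cycle (a deliberately simplified model)."""
    if num_weights < 0 or num_banks < 1:
        raise ValueError("num_weights must be >= 0 and num_banks >= 1")
    # Integer ceiling division: the busiest bank determines the cycle count.
    return (num_weights + num_banks - 1) // num_banks


# Hypothetical workload: 1024 weights must be read in one time step.
print(read_cycles(1024, num_banks=1))  # 1024 (single, sequentially accessed memory)
print(read_cycles(1024, num_banks=8))  # 128  (eight banks read in parallel)
```

In this toy model the read time shrinks linearly with the number of banks; real hardware adds costs such as bank conflicts and routing, which the model ignores.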

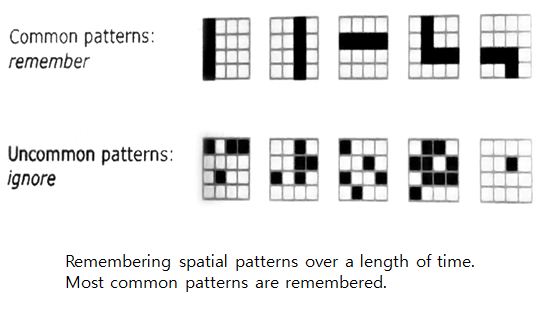

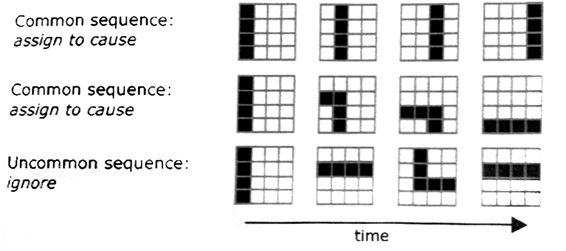

SNNs or PCNNs 1. Reproduce spikes or pulses.

2. Solve problems such as vision tasks.

Problems of large PCNNs 1. Calculation steps.

2. Communication resources.

3. Load balancing.

4. Storage capacity.

5. Memory bandwidth.

Spiking neuron model with adaptive synapses.

Non-leaky integrate-and-fire neuron (IFN)

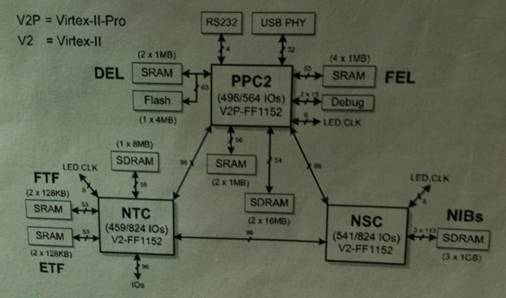

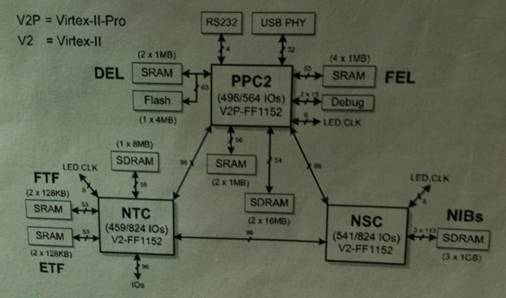
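The model's equations are not reproduced in these notes. As a minimal sketch, the following assumes the textbook form of a non-leaky integrate-and-fire neuron with unit membrane capacitance, dv/dt = I(t), a firing threshold and a reset to zero; the class, function and parameter names are hypothetical. Because there is no leak term, a constant input current makes v grow linearly, so the firing time can be computed directly. This is the kind of check the neuron-spike-phase performs when it asks whether a neuron fires before the next spike stop-event.

```python
import math
from dataclasses import dataclass


@dataclass
class NonLeakyIFN:
    """Hypothetical non-leaky integrate-and-fire neuron: dv/dt = I (unit capacitance)."""
    threshold: float = 1.0  # firing threshold (assumed value)
    v: float = 0.0          # membrane potential

    def step(self, current: float, dt: float) -> bool:
        """Euler step, exact for a current that is constant over dt (no leak term).
        Returns True and resets v to 0 when the threshold is reached."""
        self.v += current * dt
        if self.v >= self.threshold:
            self.v = 0.0
            return True
        return False

    def time_to_fire(self, current: float) -> float:
        """Time until the threshold is reached under a constant input current."""
        if current <= 0.0:
            return math.inf
        return max(self.threshold - self.v, 0.0) / current


def fires_before(neuron: NonLeakyIFN, current: float, time_to_next_stop: float) -> bool:
    """Does the neuron start to fire before the next spike stop-event changes its input?"""
    return neuron.time_to_fire(current) < time_to_next_stop


neuron = NonLeakyIFN(threshold=1.0)
print(neuron.time_to_fire(0.25))                          # 4.0
print(fires_before(neuron, 0.25, time_to_next_stop=3.0))  # False
```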

Overview of the SEE architecture

A. Simulation control (PPC2) 1. Configuration of the network.

2. Monitoring of network parameters.

3. Administration of the event-lists.

– Two event-lists: DEL (Dynamic Event-List) contains all excited neurons, i.e. neurons that receive a spike or an external input current.

FEL (Fire Event-List) stores all firing neurons, which are in the spike sending state, together with the time at which each neuron enters the spike receiving state again.
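A minimal software sketch of the two event-lists, assuming the DEL can be kept as a set of neuron indices and the FEL as a min-heap ordered by the time at which each neuron re-enters the spike receiving state. The class and method names and the fixed spike duration are assumptions for illustration; the notes do not say how SEE stores these lists in hardware.

```python
import heapq
import math
from typing import List, Set, Tuple


class EventLists:
    """Hypothetical software model of the DEL and FEL kept by simulation control."""

    def __init__(self) -> None:
        self.del_list: Set[int] = set()         # DEL: excited neurons
        self.fel: List[Tuple[float, int]] = []  # FEL: (time back in receiving state, neuron)

    def excite(self, neuron: int) -> None:
        """Neuron received a spike or an external input current."""
        self.del_list.add(neuron)

    def fire(self, neuron: int, t_now: float, spike_duration: float) -> None:
        """Neuron enters the spike sending state; store when it stops sending."""
        heapq.heappush(self.fel, (t_now + spike_duration, neuron))

    def next_stop_time(self) -> float:
        """Time of the earliest spike stop-event, or infinity if no neuron fires."""
        return self.fel[0][0] if self.fel else math.inf

    def pop_stopped(self, t_now: float) -> List[int]:
        """Remove and return the neurons whose spike has ended by t_now."""
        stopped = []
        while self.fel and self.fel[0][0] <= t_now:
            stopped.append(heapq.heappop(self.fel)[1])
        return stopped


lists = EventLists()
lists.excite(7)
lists.fire(7, t_now=0.0, spike_duration=2.0)
lists.fire(3, t_now=0.5, spike_duration=1.0)
print(lists.next_stop_time())  # 1.5
print(lists.pop_stopped(1.6))  # [3]
```

A heap keeps the earliest stop-event at the front, which is the value the neuron-spike-phase needs to compare against.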

B. Network Topology Computation (NTC)

– Topology-vector-phase: The presynaptic activity is determined for each excited neuron.

– Topology-update-phase: The tag-fields are updated according to the spike start-events or spike stop-events that occurred.
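The two NTC phases can be sketched in software as follows. The sketch assumes that a tag-field is a per-neuron flag marking whether the neuron is currently in the spike sending state, and that presynaptic activity is the sum of the weights from tagged presynaptic neurons. Both the data layout (a dict of incoming weights per neuron) and the function names are hypothetical.

```python
from typing import Dict, Iterable, List

# weights[post][pre] = synaptic weight from neuron `pre` to neuron `post` (assumed layout)
Weights = Dict[int, Dict[int, float]]


def topology_vector_phase(excited: Iterable[int], weights: Weights,
                          tag: List[bool]) -> Dict[int, float]:
    """Presynaptic activity of each excited neuron: the sum of weights from
    presynaptic neurons whose tag-field marks them as currently firing."""
    return {post: sum(w for pre, w in weights.get(post, {}).items() if tag[pre])
            for post in excited}


def topology_update_phase(tag: List[bool], start_events: Iterable[int],
                          stop_events: Iterable[int]) -> None:
    """Set the tag-field on a spike start-event and clear it on a stop-event."""
    for neuron in start_events:
        tag[neuron] = True
    for neuron in stop_events:
        tag[neuron] = False


weights: Weights = {2: {0: 0.5, 1: 0.25}}
tag = [False, False, False]
topology_update_phase(tag, start_events=[0], stop_events=[])
print(topology_vector_phase([2], weights, tag))  # {2: 0.5}
```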

C. Neuron State Computation (NSC)

– Neuron-spike-phase: For each excited neuron, it is determined whether the neuron will start to fire before the next spike stop-event.

– Neuron-update-phase

– Bulirsch-Stoer method of integration (MMID, PZEXTR)

– Modified-midpoint integration (MMID)

– Polynomial extrapolation (PZEXTR)
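The Bulirsch-Stoer method combines the two routines named above: MMID advances the ODE over one interval with several even numbers of substeps, and PZEXTR extrapolates these results to zero step size. Because the modified-midpoint error expansion contains only even powers of h, the extrapolation below uses Neville's scheme in the variable h². This is a simplified, fixed-order sketch with no adaptive step-size or error control, unlike a full Bulirsch-Stoer driver; the function names mirror MMID and PZEXTR, but the signatures and the step sequence 2, 4, ..., 12 are assumptions.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Deriv = Callable[[float, Vector], Vector]


def mmid(f: Deriv, t0: float, y0: Sequence[float], big_h: float, n: int) -> Vector:
    """Modified midpoint: advance y' = f(t, y) from t0 to t0 + big_h in n substeps."""
    h = big_h / n
    z_prev = list(y0)                                            # z_0
    z_curr = [a + h * b for a, b in zip(z_prev, f(t0, z_prev))]  # z_1
    for m in range(1, n):
        dz = f(t0 + m * h, z_curr)
        # z_{m+1} = z_{m-1} + 2h f(t_m, z_m)
        z_prev, z_curr = z_curr, [a + 2.0 * h * b for a, b in zip(z_prev, dz)]
    dz = f(t0 + big_h, z_curr)
    # Final smoothing step: y(t0 + H) ~ (z_n + z_{n-1} + h f(t0 + H, z_n)) / 2
    return [0.5 * (a + b + h * c) for a, b, c in zip(z_prev, z_curr, dz)]


def bs_step(f: Deriv, t0: float, y0: Sequence[float], big_h: float,
            steps: Sequence[int] = (2, 4, 6, 8, 10, 12)) -> Vector:
    """One fixed-order Bulirsch-Stoer step: MMID results for each substep count,
    extrapolated to h -> 0 with Neville's scheme in h^2 (the PZEXTR role)."""
    table: List[List[Vector]] = []  # table[i][j] = T_{i,j}
    for i, n in enumerate(steps):
        row = [mmid(f, t0, y0, big_h, n)]
        for j in range(1, i + 1):
            ratio = (n / steps[i - j]) ** 2  # (h_{i-j} / h_i)^2
            newer, older = row[j - 1], table[i - 1][j - 1]
            row.append([a + (a - b) / (ratio - 1.0) for a, b in zip(newer, older)])
        table.append(row)
    return table[-1][-1]


# Usage: y' = -y, y(0) = 1; one step of size 1 approximates exp(-1).
print(bs_step(lambda t, y: [-y[0]], 0.0, [1.0], 1.0)[0], math.exp(-1.0))
```

Each extrapolation column cancels one more even power of h, which is why a few cheap midpoint sweeps can reach high accuracy over a large step.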

PCB of spiking neural network emulation engine

Performance analysis

| n | Network size N_NEURON | Bulirsch-Stoer steps N_BSSTEP | Software time T_SW (s) | SEE time T_SEE (s) | Speed-up F_SPEED-UP |
|---|---|---|---|---|---|
| 4 | 32×32 | 98717 | 1405 | 45 | 31.2 |
| 4 | 48×48 | 222365 | 6527 | 226 | 28.9 |
| 4 | 64×64 | 420299 | 22620 | 758 | 29.8 |
| 4 | 80×80 | 721463 | 65277 | 2032 | 32.1 |
| 4 | 96×96 | 926458 | 119109 | 3757 | 31.7 |
| 8 | 32×32 | 107276 | 1990 | 63 | 31.6 |
| 8 | 48×48 | 235863 | 7263 | 312 | 23.3 |
| 8 | 64×64 | 413861 | 31548 | 972 | 32.5 |
| 8 | 80×80 | 645694 | 80378 | 2370 | 33.9 |
| 8 | 96×96 | 967572 | 142834 | 5113 | 29.9 |
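The listed speed-up factors match T_SW / T_SEE. A short check that recomputes them from the times in the performance table (values copied from the table; the variable and function names are illustrative):

```python
# (n, network size, T_SW in s, T_SEE in s, listed speed-up) copied from the table
ROWS = [
    (4, "32x32", 1405, 45, 31.2),
    (4, "48x48", 6527, 226, 28.9),
    (4, "64x64", 22620, 758, 29.8),
    (4, "80x80", 65277, 2032, 32.1),
    (4, "96x96", 119109, 3757, 31.7),
    (8, "32x32", 1990, 63, 31.6),
    (8, "48x48", 7263, 312, 23.3),
    (8, "64x64", 31548, 972, 32.5),
    (8, "80x80", 80378, 2370, 33.9),
    (8, "96x96", 142834, 5113, 29.9),
]


def speed_up(t_sw: float, t_see: float) -> float:
    """Speed-up of the emulation engine over the software simulation."""
    return t_sw / t_see


mismatches = []
for n, size, t_sw, t_see, listed in ROWS:
    f = round(speed_up(t_sw, t_see), 1)
    print(f"n={n} {size}: T_SW/T_SEE = {f} (listed {listed})")
    if f != listed:
        mismatches.append((n, size))
```

Recomputing every row this way reproduces the listed factor to one decimal, except the 96×96 row for n = 8, where 142834 s / 5113 s gives 27.9 rather than 29.9.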

Reference

H. H. Hellmich, M. Geike, P. Griep, P. Mahr, M. Rafanelli, and H. Klar, "Emulation Engine for Spiking Neurons and Adaptive Synaptic Weights."