HTM (Hierarchical Temporal Memory)

• HTM (Hierarchical Temporal Memory): not programmed, and does not use different algorithms for different problems.

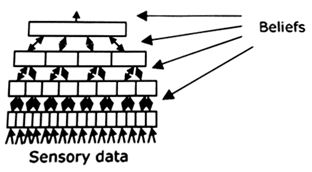

1) Discover causes

– Find relationships in the inputs.

– The set of probabilities assigned to the possible causes is called a “belief”.

2) Infer causes of novel input

– Inference : Similar to pattern recognition

– Ambiguous input -> flat belief distribution (no single cause stands out).

– HTMs handle novel input both during inference & training

3) Make predictions

– Each node stores sequences of patterns

+ current input -> predict what is likely to happen next.

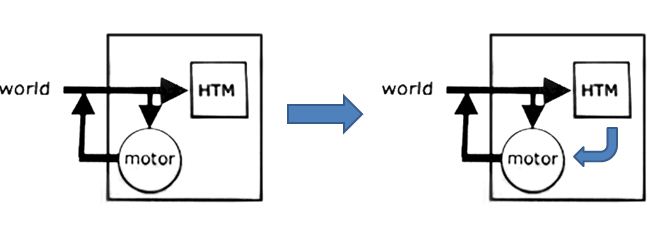

4) Direct behavior: interact with the world.
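Capabilities 2) and 3) can be illustrated with a minimal Python sketch. It is not the HTM algorithm itself. The function names (`belief`, `entropy_bits`, `predict_next`), the toy patterns, and the position-matching similarity measure are all assumptions made for illustration. The sketch shows how an ambiguous input yields a flat belief, and how stored sequences combined with the current input yield a prediction.

```python
import math
from collections import Counter


def belief(pattern, known_causes):
    """Assign a probability to each known cause for one input pattern.

    Assumption: similarity is the count of matching positions, a stand-in
    for whatever measure a real HTM node uses.
    """
    scores = {
        name: sum(a == b for a, b in zip(pattern, prototype))
        for name, prototype in known_causes.items()
    }
    total = sum(scores.values())
    if total == 0:  # nothing matches: treat every cause as equally likely
        return {name: 1 / len(scores) for name in scores}
    return {name: score / total for name, score in scores.items()}


def entropy_bits(dist):
    """Shannon entropy in bits; a higher value means a flatter belief."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)


def predict_next(recent, stored_sequences):
    """Estimate what follows `recent` from the stored sequences."""
    recent = tuple(recent)
    n = len(recent)
    counts = Counter()
    for seq in stored_sequences:
        for i in range(len(seq) - n):
            if tuple(seq[i:i + n]) == recent:
                counts[seq[i + n]] += 1
    total = sum(counts.values())
    return {nxt: c / total for nxt, c in counts.items()} if total else {}


# Hypothetical causes and inputs.
causes = {"A": (1, 1, 0, 0), "B": (0, 0, 1, 1)}
clear = belief((1, 1, 0, 0), causes)      # matches A only
ambiguous = belief((1, 0, 1, 0), causes)  # matches A and B equally
print(entropy_bits(clear), entropy_bits(ambiguous))

stored = [("a", "b", "c"), ("a", "b", "c"), ("a", "b", "d")]
print(predict_next(("a", "b"), stored))
```

The ambiguous pattern scores equally against both causes, so its belief is uniform and its entropy is maximal. The clear pattern puts all probability on one cause.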

How do HTMs discover and infer causes?

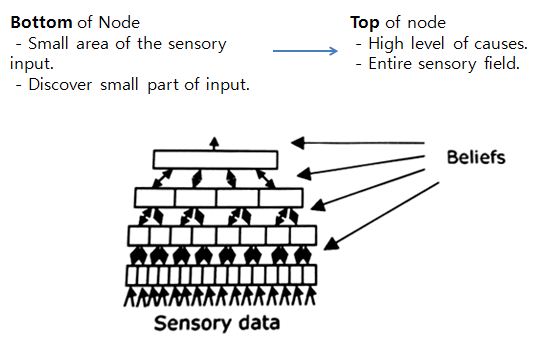

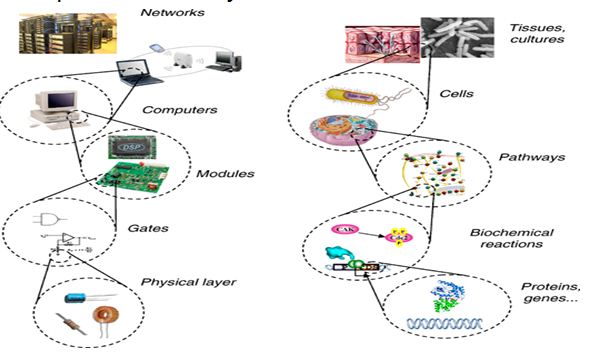

Why is a hierarchy important?

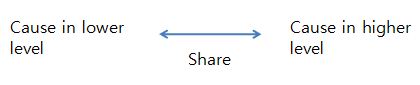

1) Shared representations lead to generalization and storage efficiency.

2) The hierarchy of HTM matches the spatial and temporal hierarchy of the real world.

3) Belief propagation ensures all nodes quickly reach the best mutually compatible beliefs.

– Belief propagation calculates the marginal distribution for each unobserved node, conditional on any observed nodes.

4) Hierarchical representation affords a mechanism for attention.
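Point 3) can be made concrete on the smallest possible hierarchy: one parent node with two children. The sketch below is a hand-rolled illustration, not code from any HTM library. The cause names, the probability tables, and the helper names (`parent_belief`, `child_marginal`) are hypothetical. An observed child sends the message P(observation | cause) up to the parent, and the parent's updated belief is then used to compute the marginal of the unobserved child.

```python
def normalize(dist):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(dist.values())
    return {k: v / total for k, v in dist.items()}


def parent_belief(prior, likelihoods, observations):
    """Posterior over the parent's causes given the observed children.

    Each observed child contributes P(observation | cause); the parent
    multiplies these messages into its prior and normalizes.
    """
    weights = dict(prior)
    for child, obs in observations.items():
        for cause in weights:
            weights[cause] *= likelihoods[child][cause][obs]
    return normalize(weights)


def child_marginal(child, parent_dist, likelihoods):
    """Marginal of an unobserved child, conditional on the evidence
    already folded into `parent_dist`."""
    values = next(iter(likelihoods[child].values())).keys()
    return {
        v: sum(parent_dist[c] * likelihoods[child][c][v] for c in parent_dist)
        for v in values
    }


# Hypothetical numbers, chosen only for illustration.
prior = {"cat": 0.5, "dog": 0.5}
likelihoods = {
    "sound": {"cat": {"meow": 0.9, "bark": 0.1},
              "dog": {"meow": 0.1, "bark": 0.9}},
    "shape": {"cat": {"small": 0.8, "large": 0.2},
              "dog": {"small": 0.4, "large": 0.6}},
}

top = parent_belief(prior, likelihoods, {"sound": "meow"})
shape = child_marginal("shape", top, likelihoods)
print(top)
print(shape)
```

On a tree like this one, a single upward and downward pass gives the exact marginals, which is one reason a hierarchy lets the nodes settle on mutually compatible beliefs quickly.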

How does each node discover and infer causes?

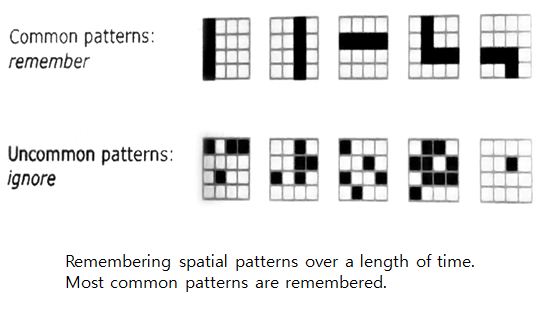

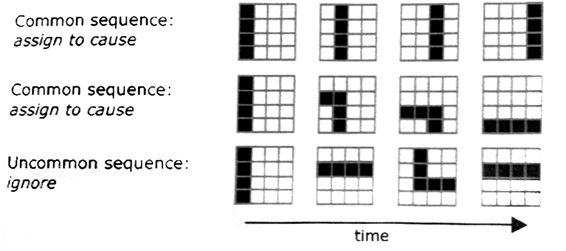

Assigning causes.

The most common sequences of patterns are assigned as causes.

Assigned causes are used for prediction, behavior, etc.
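As a rough sketch of the assignment step, the following Python counts fixed-length sequences in an input stream and keeps the most frequent ones as causes. The fixed window length, the `max_causes` limit, and the function name `assign_causes` are assumptions for illustration. A real node is not described here as using fixed-length windows.

```python
from collections import Counter


def assign_causes(stream, length, max_causes):
    """Return the `max_causes` most frequent sequences of `length` patterns."""
    windows = [
        tuple(stream[i:i + length])
        for i in range(len(stream) - length + 1)
    ]
    counts = Counter(windows)
    return [seq for seq, _ in counts.most_common(max_causes)]


# Hypothetical stream of pattern labels seen by one node.
stream = list("abcabcabxabc")
causes = assign_causes(stream, length=3, max_causes=1)
print(causes)
```

Rare sequences such as the one containing `x` fall below the cut and are not assigned, so the node's limited capacity is spent on the sequences it sees most often.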

Why is time necessary to learn?

• Pooling (many-to-one) methods

– Overlap

– Learning of sequences: this is the method HTM uses.
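The role of time in pooling can be sketched as follows: patterns that repeatedly follow one another in time are mapped to the same group, even when they share no features. This is a simplified illustration using transition counts and a union-find structure, not the algorithm used by HTM. The threshold `min_count`, the function name `temporal_groups`, and the pattern labels are hypothetical.

```python
from collections import Counter


def temporal_groups(stream, min_count=2):
    """Group patterns that follow each other at least `min_count` times."""
    transitions = Counter(zip(stream, stream[1:]))
    parent = {p: p for p in stream}

    def find(p):
        # Follow parent links to the group representative,
        # shortening the path along the way.
        while parent[p] != p:
            parent[p] = parent[parent[p]]
            p = parent[p]
        return p

    for (a, b), n in transitions.items():
        if n >= min_count and a != b:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for p in parent:
        groups.setdefault(find(p), set()).add(p)
    return sorted(groups.values(), key=sorted)


# Hypothetical stream: three views of one object, then two of another.
stream = ["c1", "c2", "c3", "c1", "c2", "c3",
          "d1", "d2", "d1", "d2", "c1", "c2", "c3"]
groups = temporal_groups(stream)
print(groups)
```

An overlap-based method would need `c1`, `c2`, and `c3` to look alike in order to pool them. Here only their order in time matters, and a static set of patterns without timing carries no such information.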