
Compiling for Reconfigurable Computing: A Survey

Introduction

- Reconfigurable Computing (RC) platforms are promising because:

- they accelerate computations by exploiting the concurrent nature of hardware (HW) structures

- the HW architecture itself can be customized to the application.

- However, programming RC platforms effectively is a challenging and error-prone process:

- programmers must assume the role of HW designers and master hardware description languages (HDLs)

- this limits the acceptance and dissemination of the technology.

- The survey focuses on the major research efforts that map computations written in imperative programming languages to RC architectures

- Why?

- Robust automatic compilation from standard software programming languages is vital for the success of RC, and it is currently lacking

- High-Level Synthesis (HLS) tools developed for ASICs do not take the characteristics of RC into consideration.

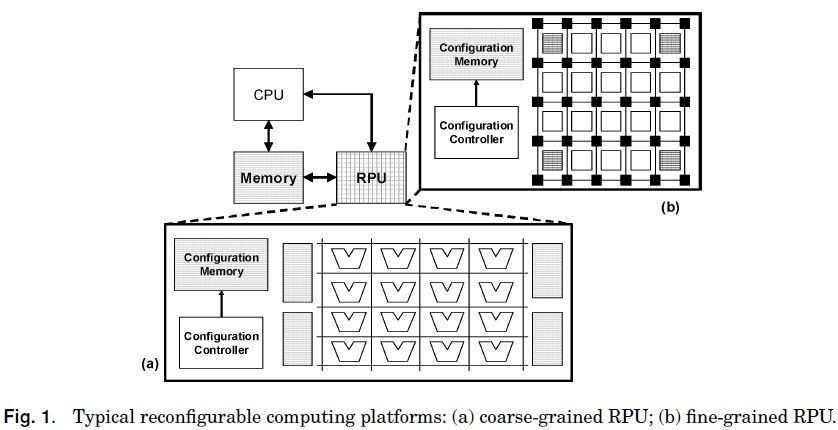

Reconfigurable Architectures

- Reconfigurable computing systems are typically based on:

- Reconfigurable processing units (RPUs) as co-processor units

- A host system.

- The type of interconnection between the RPUs and the host system, together with the granularity of the RPUs, leads to a wide variety of possible reconfigurable architectures -> focus on specific architectures

- The type of coupling of the RPUs to the existing computing system has a significant impact on the communication cost. Architectures are classified into 3 groups, in decreasing order of communication cost:

- RPUs coupled to the host bus (Xputer, SPLASH, RAW, etc.).

- RPUs tightly coupled to the host processor (Garp, PipeRench, RaPiD, NAPA, REMARC, etc.).

- The RPUs execute autonomously and have access to the system memory

- In most architectures, the host processor is stalled while the RPU is executing.

- RPUs acting as an extended datapath (reconfigurable functional units) controlled by special opcodes of the host processor instruction set (Chimaera, PRISC, OneChip, ConCISe).
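
The three coupling groups above can be written down as a small lookup table. This is only a sketch for navigating the notes: the names `Coupling`, `EXAMPLES`, `coupling_of` and `cheaper_communication` are made up here, and the numeric values encode nothing more than the ordering stated above (higher value = lower communication cost).

```python
from enum import IntEnum


class Coupling(IntEnum):
    # Ordered as in the notes: a higher value means a lower communication cost.
    HOST_BUS = 1           # RPU attached to the host bus
    TIGHTLY_COUPLED = 2    # RPU tightly coupled to the host processor
    EXTENDED_DATAPATH = 3  # RPU as reconfigurable functional units


# Example architectures exactly as listed in the notes.
EXAMPLES = {
    Coupling.HOST_BUS: ["Xputer", "SPLASH", "RAW"],
    Coupling.TIGHTLY_COUPLED: ["Garp", "PipeRench", "RaPiD", "NAPA", "REMARC"],
    Coupling.EXTENDED_DATAPATH: ["Chimaera", "PRISC", "OneChip", "ConCISe"],
}


def coupling_of(name: str) -> Coupling:
    """Return the coupling group an architecture is listed under."""
    for coupling, names in EXAMPLES.items():
        if name in names:
            return coupling
    raise KeyError(name)


def cheaper_communication(a: str, b: str) -> bool:
    """True if architecture a belongs to a group with lower communication cost than b."""
    return coupling_of(a) > coupling_of(b)
```

For instance, `cheaper_communication("Chimaera", "SPLASH")` is true because an extended-datapath RPU sits closer to the processor than a bus-attached one.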

Overview of Compilation Flows

- Front-end: decouples the language-specific aspects of the input program by translating it into an intermediate representation (IR)

- Middle-end:

- architecture-neutral transformations: constant folding, common subexpression elimination, etc.

- architecture-driven transformations: loop transformations, bit-width narrowing.

- together, these transformations expose specialized data types and operations, as well as parallelism opportunities.

- Back-end:

- Schedules macro-operations & instructions

- Performs low-level steps of mapping, placement & routing (P&R)
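
To make the two kinds of middle-end transformation concrete, here is a minimal sketch on a toy expression IR. It is not taken from the survey: the IR classes (`Const`, `Var`, `BinOp`) and the helper names are hypothetical, only `+` and `*` on non-negative integers are handled, and each variable is assumed to carry a known upper bound. Constant folding stands in for the architecture-neutral group, and bit-width narrowing for the architecture-driven group.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Const:
    value: int


@dataclass(frozen=True)
class Var:
    name: str
    max_value: int  # assumed known unsigned upper bound, e.g. 255 for a byte


@dataclass(frozen=True)
class BinOp:
    op: str  # "+" or "*" only in this sketch
    left: "Expr"
    right: "Expr"


Expr = Union[Const, Var, BinOp]


def fold_constants(e: Expr) -> Expr:
    """Architecture-neutral: evaluate operations whose operands are both constants."""
    if isinstance(e, BinOp):
        left, right = fold_constants(e.left), fold_constants(e.right)
        if isinstance(left, Const) and isinstance(right, Const):
            value = left.value + right.value if e.op == "+" else left.value * right.value
            return Const(value)
        return BinOp(e.op, left, right)
    return e


def max_value(e: Expr) -> int:
    """Largest value the expression can take, given the variable bounds."""
    if isinstance(e, Const):
        return e.value
    if isinstance(e, Var):
        return e.max_value
    left, right = max_value(e.left), max_value(e.right)
    return left + right if e.op == "+" else left * right


def bit_width(e: Expr) -> int:
    """Architecture-driven: smallest unsigned width that holds every value of e."""
    return max(1, max_value(e).bit_length())


# x * (2 + 2), where x is an 8-bit unsigned input
x = Var("x", 255)
expr = BinOp("*", x, BinOp("+", Const(2), Const(2)))
folded = fold_constants(expr)  # BinOp("*", x, Const(4))
print(bit_width(folded))       # 10
```

On a fixed-width processor the product would occupy a full machine word regardless; on an RPU the multiplier can be sized to the 10 bits actually needed, which is why bit-width narrowing is listed among the architecture-driven transformations.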


Topic revision: r7 - 28 Mar 2011, ToanMai

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
